Red Hat AI includes:

Red Hat AI Inference Server optimizes model inference across the hybrid cloud for faster, cost-effective model deployments.

Powered by vLLM, it includes access to validated and optimized third-party models on Hugging Face. It also includes LLM compressor tools.

Red Hat Enterprise Linux® AI is a platform to consistently run large language models (LLMs) in individual server environments.

With the included Red Hat AI Inference Server, you get fast, cost-effective hybrid cloud inference, using vLLM to maximize throughput and minimize latency.

Plus, with features like image mode, you can consistently implement solutions at scale. It also lets you apply the same security profiles across Linux, uniting your team in a single workflow.

Includes Red Hat AI Inference Server

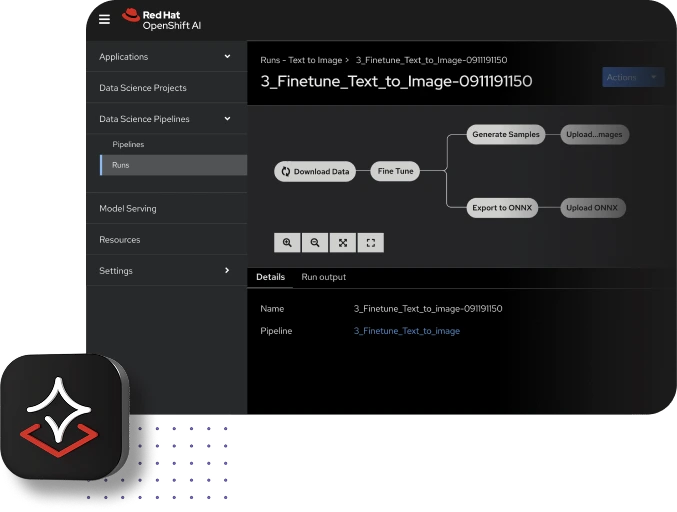

Red Hat OpenShift® AI builds on the capabilities of Red Hat OpenShift to provide a platform for managing the lifecycle of generative and predictive AI models at scale.

It provides production AI, enabling organizations to build, deploy and manage AI models and agents across hybrid cloud environments, including sovereign and private AI.

Includes Red Hat AI Inference Server

Includes Red Hat Enterprise Linux AI

Why Red Hat AI 3?

Red Hat AI 3 is purpose-built for environments where data locality, sovereignty, and compliance matter:

Unified foundation

Red Hat AI 3 combines Red Hat OpenShift AI, Red Hat Enterprise Linux AI and Red Hat AI Inference Server into one enterprise‑grade platform. This reduces the complexity of managing disparate tools and allows organizations to move AI workloads from proofs‑of‑concept to production at scale.

Hybrid & sovereign ready

The platform is designed for hybrid and multi‑vendor environments, supporting any model on any accelerator, from data centers to public clouds and sovereign edge deployments.

Open standards & openness

Red Hat AI 3 is built on open source components and open standards. A unified API layer based on Llama Stack and support for the emerging Model Context Protocol (MCP) help keep agent‑based AI systems interoperable and future‑proof.

Distributed Inference & llm‑d

Inference, the stage where AI models generate responses, is often cost‑intensive. Red Hat AI 3 focuses on this “doing” phase by introducing llm‑d, a distributed inference engine built on the vLLM project. llm‑d spreads large language models across multiple servers and uses Kubernetes‑aware scheduling to maximize GPU utilization. The result is predictable performance, improved efficiency and reduced cost per token.

Key benefits of llm‑d include:

Disaggregated model serving for better performance per dollar

Inference‑aware load balancing that optimizes response times

Simplified deployment paths (“well‑lit paths”) that streamline the rollout of large models
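To make "cost per token" concrete, here is a minimal sketch of the arithmetic behind it: the hourly cost of the GPUs divided by the tokens they serve in an hour. The function name and every figure below are hypothetical, chosen only for illustration; they are not measurements of llm‑d or any Red Hat product.

```python
def cost_per_million_tokens(
    gpu_hourly_cost: float, gpu_count: int, tokens_per_second: float
) -> float:
    """Return the serving cost per one million generated tokens."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    hourly_cost = gpu_hourly_cost * gpu_count   # what the cluster costs per hour
    tokens_per_hour = tokens_per_second * 3600  # what the cluster serves per hour
    return hourly_cost / tokens_per_hour * 1_000_000


# Hypothetical figures for illustration only, not benchmark results.
baseline = cost_per_million_tokens(
    gpu_hourly_cost=2.0, gpu_count=4, tokens_per_second=1000
)
improved = cost_per_million_tokens(
    gpu_hourly_cost=2.0, gpu_count=4, tokens_per_second=2000
)
```

With the hardware cost held constant, doubling throughput halves the cost per token, which is why higher GPU utilization translates directly into lower serving cost.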

Model‑as‑a‑Service (MaaS) & Self‑Managed Gen AI

Red Hat AI 3 lets organizations become their own AI providers. Its Model‑as‑a‑Service (MaaS) capability exposes models as on‑demand endpoints within your own infrastructure. This approach gives IT teams cost control and allows AI use cases that can’t run on public clouds due to privacy or compliance concerns. With MaaS, platform teams can provide centrally managed model endpoints while tracking usage and maintaining internal governance.
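As a rough sketch of what consuming such an internal endpoint could look like, the example below builds a chat request and tallies token usage per team. It assumes the endpoint speaks the OpenAI‑compatible chat completions format that vLLM's server offers; the page does not specify the MaaS API, so the URL, model name, API key and helper functions are all hypothetical.

```python
import json
import urllib.request
from collections import defaultdict

# Hypothetical internal endpoint and model name; replace with your own.
MAAS_URL = "https://maas.example.internal/v1/chat/completions"
MODEL_NAME = "example-model"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        MAAS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def record_usage(totals: dict, team: str, response_body: dict) -> None:
    """Add the response's total token count to the team's running total."""
    usage = response_body.get("usage", {})
    totals[team] += usage.get("total_tokens", 0)


usage_by_team = defaultdict(int)
request = build_chat_request("Summarize our leave policy.", api_key="team-a-key")

# Sending is left out so the sketch runs offline. With a real endpoint:
#   with urllib.request.urlopen(request) as resp:
#       body = json.load(resp)
# Assumed response shape, following the OpenAI-style "usage" field.
sample_body = {
    "usage": {"prompt_tokens": 12, "completion_tokens": 30, "total_tokens": 42}
}
record_usage(usage_by_team, "team-a", sample_body)
```

Keeping the per‑team tally on the platform side is one simple way to support the usage tracking and internal governance described above.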

AI Hub, Gen AI Studio & Curated Models

To foster collaboration, Red Hat AI 3 centralizes the entire AI lifecycle:

AI Hub – A lifecycle management and governance center where platform engineers control AI assets and monitor deployments.

Gen AI Studio – A hands‑on environment that lets developers experiment with models, tune parameters and prototype generative AI applications.

Curated model catalog – A registry of validated, optimized models, including popular open‑source LLMs like GPT‑OSS and DeepSeek‑R1 along with specialized models such as Whisper for speech‑to‑text and Voxtral Mini for voice‑enabled agents. Compressed versions of models allow organizations to run large models on fewer GPUs without sacrificing quality.

These features give platform engineers and AI developers a shared foundation, streamlining collaboration and making it easier to operationalize AI across data centers, public clouds and edge environments.

Preparing for Agentic AI

Red Hat AI 3 isn’t just about today’s workloads; it also lays the groundwork for agent‑based AI systems. A unified API built on Llama Stack and early adoption of the Model Context Protocol help next‑generation AI agents interact with tools and data sources securely and efficiently. Additional customization tools (such as Docling for ingesting unstructured data) and a model‑customization toolkit expand Red Hat’s InstructLab initiative, enabling organizations to fine‑tune models using their own data.

As a trusted integration partner, ComputingEra brings Red Hat AI 3 to organizations across Saudi Arabia. Our services include:

AI readiness assessments and architecture design

Deployment of Red Hat AI 3 on‑premises or in hybrid cloud environments

GPU enablement and infrastructure sizing

Integration with existing data platforms and MLOps pipelines

Ongoing support, training and optimization to keep your AI platform secure and efficient

AI at ComputingEra

At ComputingEra, Artificial Intelligence is not treated as an experiment or a standalone tool.

We help organizations adopt AI as an enterprise platform—secure, governed, and ready for production.

Our focus is on enabling AI where your data lives, under your control, and aligned with regulatory, security, and operational requirements.

Our AI Approach

We deliver AI as a platform, not just models:

AI designed for production, not labs

Full control over data, models, and infrastructure

Built for on-prem, hybrid, and sovereign environments

Integrated with existing enterprise systems

This approach allows organizations to scale AI confidently across departments and use cases.

Industries We Support

- Banking & Financial Services

- Telecom & Technology

- Healthcare & Life Sciences

- Government & Public Sector

- Large Enterprises & Data-Driven Organizations

Enterprise-Grade by Design

Our AI platforms are built with:

Security & Governance

Role-based access, workload isolation, auditability

Scalability & Performance

GPU-enabled, Kubernetes-native architectures

Compliance & Sovereignty

Suitable for regulated and data-sensitive environments

Book Your Assessment & Consultation Today!

By combining Red Hat’s open source innovation with ComputingEra’s expertise in enterprise infrastructure and local regulatory requirements, we empower organizations to harness AI responsibly, cost‑effectively and at scale.

Schedule your session now and see how ComputingEra can transform your business!

Ready to Explore Red Hat AI 3?

If you’re ready to move beyond AI experiments and build a scalable, secure AI platform, Red Hat AI 3 provides the foundation. Contact ComputingEra to learn how we can help you adopt Red Hat AI 3 for your next‑generation AI initiatives.